AI models are more likely to give an incorrect answer than to admit they don't know, according to a study published in the journal Nature.

Artificial intelligence often answers confidently even when it is wrong, because it has been trained to trust the information it processes. According to the researchers, the models do not recognize the limits of their own abilities.

While larger models tend to perform better on complex tasks, this does not guarantee accuracy, especially on simpler ones.

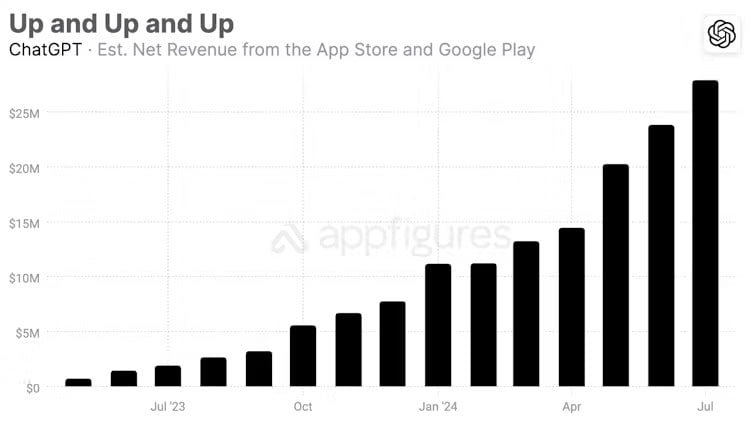

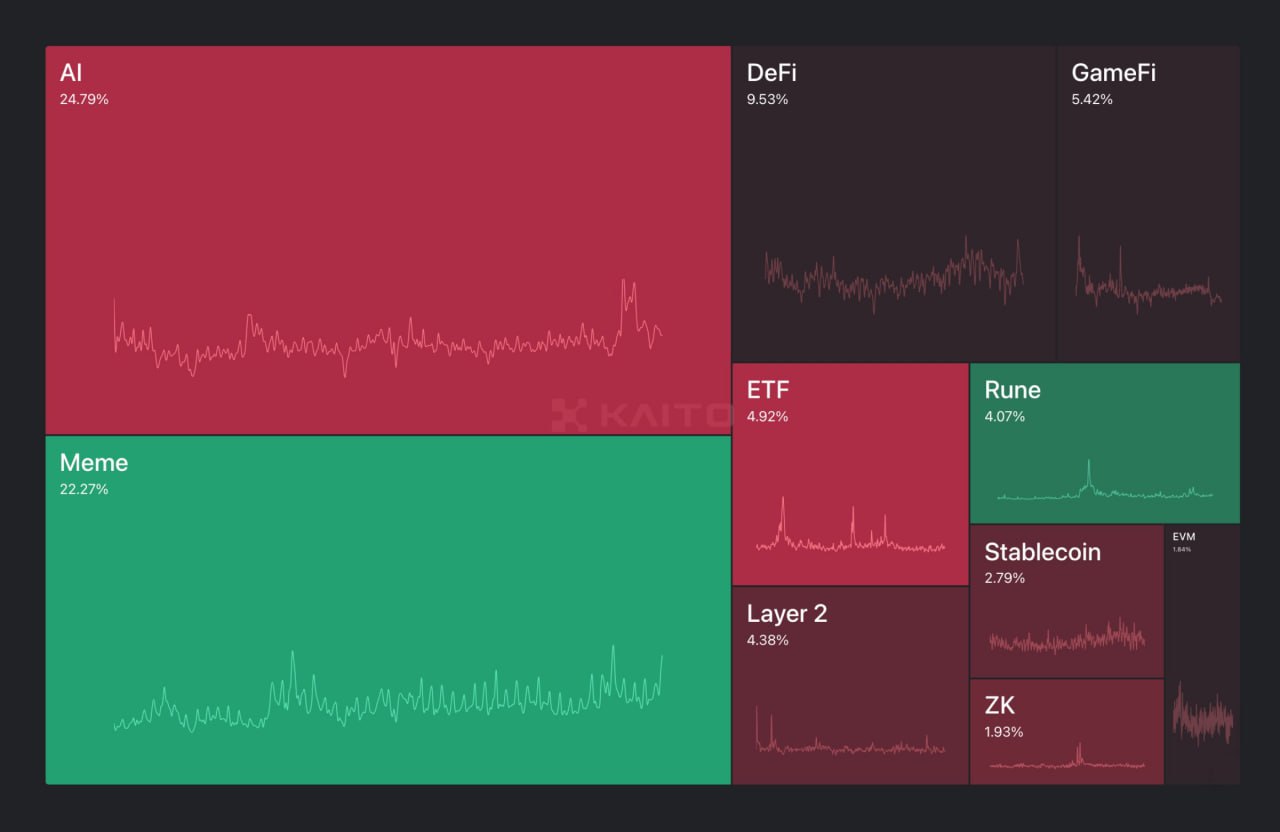

Larger models are less likely to sidestep difficult questions. Instead, they attempt to answer them and sometimes get them wrong. The chart shows that the models make mistakes (in red) more often than they admit they cannot answer (in light blue).

The researchers stress that this is not because the models are incapable of handling simple tasks; rather, they are trained to solve complex problems. Neural networks trained on massive datasets may lose basic skills along the way.

The problem is made worse by the confidence AI displays in its answers. Users often find it difficult to tell whether the information is accurate or false.

Moreover, improving a model’s performance in one area can negatively affect its performance in another.

“The percentage of answer avoidance rarely exceeds the rate of errors. This shows a decline in reliability,” the researchers conclude.

They also point to shortcomings in modern AI training methods. Fine-tuning with reinforcement learning from human feedback (RLHF) worsens the issue, because it makes models less inclined to avoid tasks they cannot handle.